Chris Johnson

Perhaps not in established areas of computer science research, according to Chris Johnson MS’84 PhD’90, director of the Scientific Computing and Imaging (SCI) Institute within the University of Utah’s School of Computing. “Lots of interesting research and innovation can still happen,” says Johnson, “but where the U’s future should lie—where we can compete effectively—is in areas between the traditional areas. The frontiers are between the standard academic disciplines, and that is where you can have a big impact.”

Interdisciplinary research is growing at universities worldwide, explains Martin Berzins, director of the School of Computing. “If you’re going to use image analysis to figure out how the brain works, or if you’re trying to understand how to provide computer software to model the hazards associated with fires and explosions, you’re not going to do it on your own,” he says. Today’s School of Computing is engaged both in mainstream computer science and in many multi-disciplinary projects with other departments, other universities, and with government and business entities.

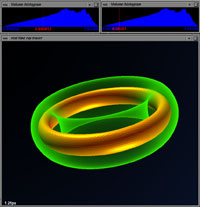

For example, researchers at the SCI Institute collaborate with biomedical researchers to acquire and process biomedical data, then design cutting-edge imaging and visualization tools to help scientists and physicians understand the results. “What they did back in the early days is similar to what we’ve been able to do at SCI,” says Johnson. “We were breaking new ground, applying computing to biomedical applications before it was commonplace.”

A current project involves combining medical CT scans with computational techniques to produce detailed three-dimensional images of mouse embryos—an efficient new method to test the safety of medicines and learn how mutant genes cause birth defects or cancer. Other researchers in the School of Computing are working with the Mechanical Engineering department on advances in robotics. A long-standing collaboration involves joint work with Electrical Engineering to educate students so that they can help build future generations of computer systems.

Martin Berzins

Berzins enthusiastically embraces interdisciplinary projects, but says, “You have to have something to bring to the multi-disciplinary table, so you can’t neglect your own core discipline.”

As an example, Berzins explains, “A key challenge is dealing with large amounts of data and making sense of it.” Technology allows researchers to gather a tremendous amount of data, but handling all of it can be beyond the capacity of today’s processors. The School of Computing is researching new ways to compute, analyze, and visualize the enormous amounts of data arising in biomedical and engineering applications.

“We’ve changed the educational paradigm,” says Berzins. “Our Computing degree, initiated under the previous director, Chris Johnson, allows us to put together new multi-disciplinary programs at the graduate level in a short space of time. We have programs in robotics, computing, and graphics, for example. In the future, we’d like to create more joint programs with departments such as Biomedical Informatics and to continue to grow in areas such as robotics and computer engineering.”

New Challenges

Clearly, innovation is still possible and necessary, but universities face new challenges in conducting breakthrough research.

One challenge to multidisciplinary work is the traditional way universities are structured. “It’s hard for universities,” says Johnson, “because they are set up in silos of departments. When you transcend departments, they have difficulty knowing how to evaluate you. You’re publishing outside the traditionally defined departmental discipline, so sometimes you’re viewed as ‘not one of us.’” Johnson believes the hierarchies and traditions of universities may have to change to truly take advantage of interdisciplinary work. “To be successful, we need to change our reward structures, such as tenure and promotion, to strongly encourage interdisciplinary research and education. Such changes will need to have strong encouragement and support from our top University officials.”

Funding is another challenge. In 1970, Congress passed the Mansfield Amendment, which turned ARPA into DARPA (adding “D” for Defense) and restricted grants to specific defense-related projects, rather than innovative research and discovery. By 1975, the U of U’s DARPA funding had evaporated.

Today, funding is even harder to come by. Instead of large umbrella grants, funds are doled out in smaller amounts, for shorter durations, and for specific deliverables. Researchers spend many hours writing grant proposals. This year, the School of Computing faculty had to write many more grant proposals to maintain the same level of research funding as the year before.

The lack of funding for long-term and “curiosity-driven” research at universities is concerning. “We’re trying to partner more with businesses and nontraditional funding sources, but that’s hard, too,” says Berzins.

“We’re still living off the investments that ARPA made back then,” says Johnson. “The economics boomed for decades from that investment.”

Describing how the lack of open-ended research money is constricting future research, he adds, “Now they’re eating their seed corn.”

“It’s a disaster, and has been for more than 30 years,” says Alan Kay MS’68 PhD’69, president of Viewpoints Research Institute, Inc. Credited with conceiving the laptop computer, object-oriented programming, and overlapping windows, Kay says, “I think government funding of fundamental research in universities was the best way to get things to happen, and still is. It is very difficult to get ‘critical mass’ funding … to support teams big enough (10 to 20) to really design, make, and test radical ideas.”

Kay does see some hope for the future, however. “A little more of the right kind of computer funding is starting to flow again, and the NSF [National Science Foundation] has recently made a few exceptions to fund more ‘far out’ proposals,” he says. “Still, the level is probably only a few percent of what it should be.”

University Research Is Still Vital

If universities can’t fund as much long-term research as they once did, and as is needed, can private industry take up the slack? From Catmull’s industry perspective, it’s unlikely. “Most companies have a short-term focus. That’s not a value judgment; it’s just a reality of business,” he says. “They’ve got to survive, so they put the smartest people on projects that have to go out now. That skews company research to be small and short-term.”

Catmull believes well-funded university programs could have a unique ability to “take a longer-term view. They can take risks and do projects that companies wouldn’t do. If you spread this across all the universities in the U.S., it’s a tremendous asset for the nation.”

Kay agrees. “If the amount and kind of funding and the height of vision would return to the levels of the ’60s, and some care was taken in choosing principal investigators, then I’m sure that similar productivity would happen again.”

The golden age of computer graphics at the University of Utah was the result of the unique alchemy of the right people, “enlightened” funding, and an inspiring environment. Simply put, a diverse mix of bright students and faculty were expected to have great ideas and do “great things,” and they were given the means to do it.

A number of years ago, Johnson talked with several of the faculty and students from the early days and quizzed them on how they accomplished so much. “What they all told me,” he says, “was that the most important thing is the people. Get the smartest, hardest-working people you can find. Put the best, most cutting-edge facilities in their hands. Then create a supportive environment where really smart people can do amazing things!”

Finding funding may have become as creative and time-consuming as the research itself, but it’s still occurring. Current projects at the U’s School of Computing are leading the field in many interdisciplinary areas, and the school’s administration is committed to maintaining those leads. Granted, today’s political, social, and academic climates are much different from those of the late ’60s and early ’70s, but innovation and discovery are still essential—and possible.

—Kelley J.P. Lindberg BS’84 is a freelance writer living in Salt Lake City

Across the United States, universities were grudgingly admitting computer science to the curriculum, but to maintain respectability, they tucked their programs under the reputable wings of mathematics professors. Fletcher, then president of the University of Utah, threw caution to the wind and hired a full-blooded computer scientist to start up his computer science department within the College of Engineering. That scientist was David Evans, a Utah native with a background in computer architecture and systems software.
